Bias in AI happens when AI systems make decisions that unfairly favor or disadvantage certain groups of people. This can occur because the data used to train the AI contains historical or societal prejudices, or because the algorithms themselves are designed in ways that unintentionally favor specific outcomes. For the average person, this can mean wrong or unfair results, such as being denied a loan or being misidentified by a facial recognition system.

Why Should You Care About Bias in AI?

Bias in AI isn't just a technical issue; it affects all of us. Imagine you're applying for a job, and the AI used to screen resumes unfairly downgrades your application because of the school you went to or the city you live in. Or think about healthcare, where a biased AI might fail to diagnose a disease accurately because patients like you were underrepresented in its training data. These are real risks that can have serious consequences.

When AI systems are biased, they can reinforce existing inequalities and make them even worse. This is why it's crucial to identify and fix these biases early on, ensuring that AI technologies treat everyone fairly.

How Do We Identify Bias in AI?

Identifying bias in AI is a critical step in ensuring that these systems operate fairly and equitably. Bias can occur at various stages of AI development and deployment, and it is essential to use a combination of methods to uncover and address these biases. Here’s how this process works:

Bias Audits

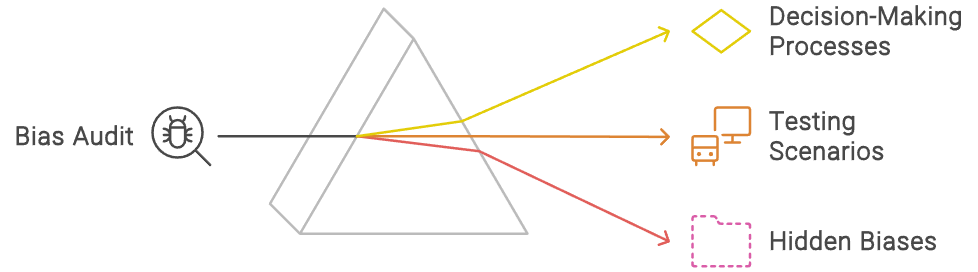

A bias audit is like a check-up for AI systems. During an audit, experts look closely at how the AI makes decisions to determine whether it treats all groups fairly. These audits involve:

- Evaluating Decision-Making Processes: Auditors examine the algorithms to see how decisions are made. They look for patterns where the AI might consistently favor one group over another.

- Testing Across Different Scenarios: Experts simulate various real-world scenarios to see how the AI performs. For example, they might input data for people who differ in age, gender, or race to see if the AI treats everyone the same way.

- Identifying Hidden Biases: Some biases aren't obvious at first glance. Auditors use specialized tools and techniques, like fairness metrics and disparate impact analysis, to uncover biases that might be lurking beneath the surface.
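One of the fairness metrics mentioned above, disparate impact, can be sketched in a few lines. This is a minimal illustration, not a full audit tool: the group labels, the sample decisions, and the function names are all hypothetical, and the 0.8 cutoff is the commonly cited "four-fifths" rule of thumb rather than a universal standard.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favorable[group] += decision
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest

# Hypothetical audit sample: 1 = approved, 0 = denied.
groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 6

ratio = disparate_impact_ratio(groups, decisions)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the 0.8 rule-of-thumb threshold; worth investigating.")
```

A low ratio does not prove discrimination on its own. It tells auditors where to look more closely.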

Testing with Diverse Data

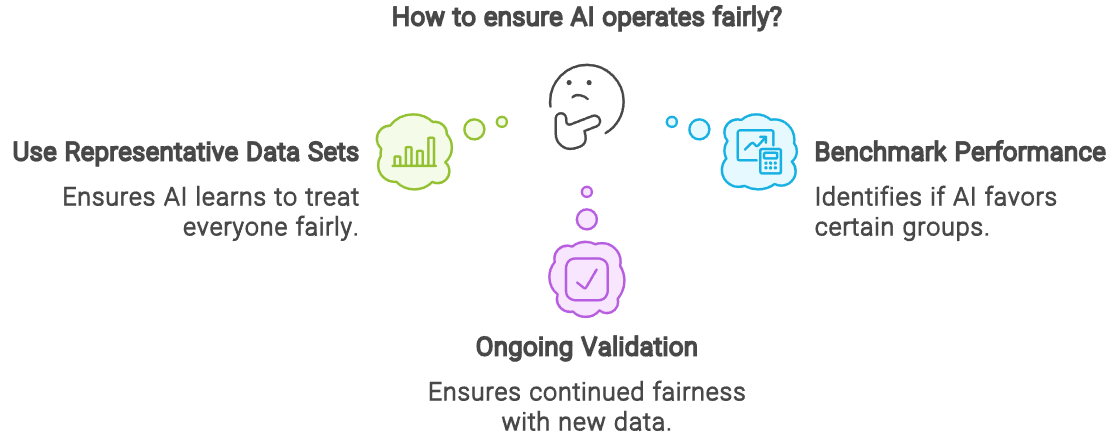

One of the most effective ways to identify bias is by using diverse data during the AI’s training and testing phases. Here's how this works:

- Representative Data Sets: When the data used to train and test the AI includes a wide range of people, the AI is more likely to learn how to treat everyone fairly. For instance, if an AI system is used in healthcare, the training data should include medical records from people of different races, genders, and ages to avoid biased outcomes.

- Benchmarking Performance: Once trained, the AI is tested across different data sets representing various demographic groups. This helps identify whether the AI performs equally well for everyone or if it favors certain groups over others.

- Ongoing Validation: Even after deployment, it's crucial to keep testing the AI with new and diverse data to ensure that it continues to operate fairly as new types of data emerge.
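The benchmarking step can be illustrated with a small per-group accuracy comparison. This is only a sketch under simple assumptions: the group names, labels, and predictions below are made up, and a real evaluation would use far more data and usually more than one metric.

```python
def accuracy_by_group(groups, y_true, y_pred):
    """Compute prediction accuracy separately for each group."""
    correct = {}
    total = {}
    for group, truth, pred in zip(groups, y_true, y_pred):
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (truth == pred)
    return {g: correct[g] / total[g] for g in total}

def accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical test set with two age groups.
groups = ["under_40"] * 4 + ["over_40"] * 4
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

per_group = accuracy_by_group(groups, y_true, y_pred)
for group, acc in per_group.items():
    print(f"{group}: accuracy {acc:.2f}")
print(f"Gap between groups: {accuracy_gap(per_group):.2f}")
```

Overall accuracy here would look acceptable at 75%, which is exactly why the per-group breakdown matters: it exposes that one group gets far less reliable results.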

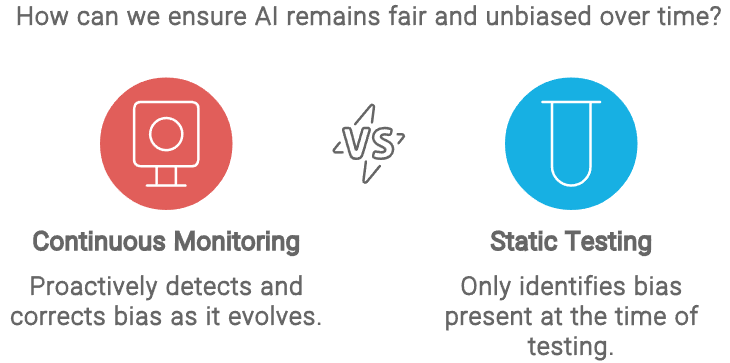

Continuous Monitoring

Bias in AI isn’t always static—it can evolve over time as the system interacts with new data. Continuous monitoring helps catch biases as they develop. This involves:

- Real-Time Analysis: Monitoring tools are set up to track the AI’s decisions in real time, looking for any signs of bias. For example, if an AI used in hiring suddenly starts favoring applicants from a particular background more than before, the monitoring system will flag this.

- Feedback Loops: Continuous monitoring also involves gathering feedback from users. This feedback can reveal biases that might not have been detected during initial testing. For example, users might report that an AI’s recommendations are consistently less accurate for certain groups.

- Adaptive Responses: When bias is detected, the system can be adjusted on the fly to correct it. This might involve tweaking the algorithm or retraining the AI with new data to ensure it remains fair.
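As a rough sketch of what real-time analysis could look like, the monitor below keeps a sliding window of recent decisions per group and raises a flag when selection rates drift too far apart. The class name, window size, and thresholds are illustrative assumptions, not taken from any particular monitoring product.

```python
from collections import defaultdict, deque

class SelectionRateMonitor:
    """Track recent decisions per group and flag large rate gaps."""

    def __init__(self, window=100, max_gap=0.2, min_samples=20):
        self.max_gap = max_gap          # largest tolerated rate difference
        self.min_samples = min_samples  # ignore groups with too little data
        self.recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, decision):
        """Store one decision (1 = favorable, 0 = unfavorable)."""
        self.recent[group].append(decision)

    def rates(self):
        """Selection rate per group over the current window."""
        return {
            g: sum(d) / len(d)
            for g, d in self.recent.items()
            if len(d) >= self.min_samples
        }

    def flagged(self):
        """True when the gap between groups exceeds max_gap."""
        rates = self.rates()
        if len(rates) < 2:
            return False
        return max(rates.values()) - min(rates.values()) > self.max_gap

monitor = SelectionRateMonitor(window=50, max_gap=0.2, min_samples=10)

# Early traffic: both groups are selected at the same rate.
for i in range(10):
    monitor.record("A", i % 2)
    monitor.record("B", i % 2)
before = monitor.flagged()

# Later traffic: the system starts favoring group A.
for _ in range(50):
    monitor.record("A", 1)
    monitor.record("B", 0)
after = monitor.flagged()

print(f"Flagged before drift: {before}, after drift: {after}")
```

The window keeps the check focused on recent behavior, so a shift shows up quickly instead of being diluted by months of older decisions.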

Strategies for Reducing Bias in AI

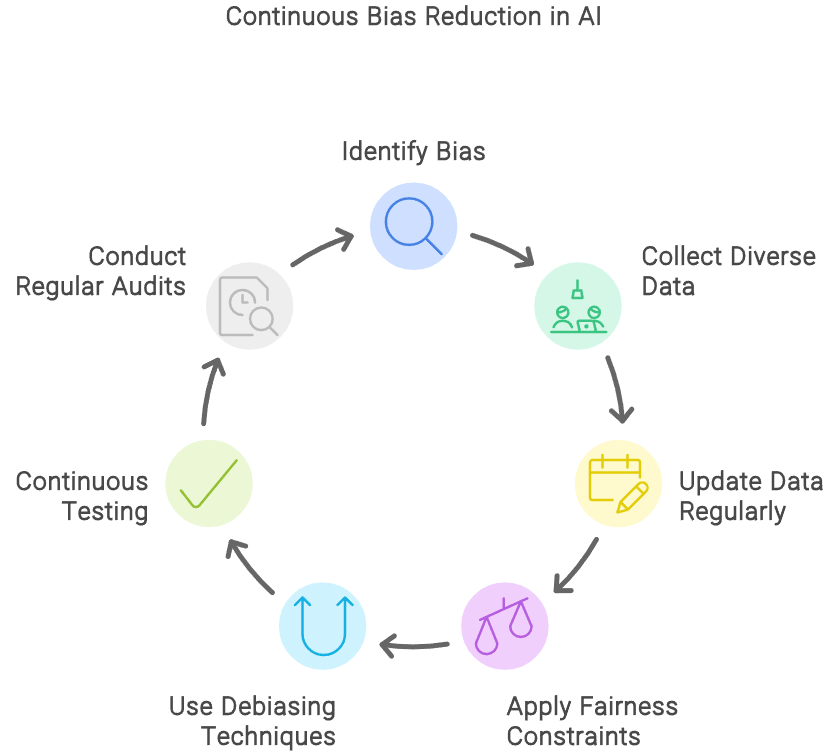

Identifying bias is just the first step. It's equally important to take effective action to reduce it. To ensure AI systems treat everyone equitably, developers should start with diverse data.

This involves collecting a wide range of data that represents individuals from all walks of life. For example, in the context of job recruiting, the data should include resumes from people of different genders, races, and educational backgrounds.

Additionally, it’s crucial to keep the data sets updated regularly to reflect current realities, thereby preventing the AI from relying on outdated or skewed information. When certain groups are underrepresented in the training data, developers should make a concerted effort to gather more data from these groups or employ techniques like oversampling to ensure fair representation.
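Random oversampling, the simplest form of the technique mentioned above, can be sketched as follows. The record layout, the `group` key, and the function name are hypothetical; the idea is simply to duplicate randomly chosen records from smaller groups until every group is as large as the largest one.

```python
import random
from collections import defaultdict

def oversample(records, group_key, seed=0):
    """Duplicate records from smaller groups until all groups match the largest."""
    if not records:
        return []
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw the missing records with replacement from the same group.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical training set: group B is underrepresented.
records = [{"group": "A", "id": i} for i in range(8)]
records += [{"group": "B", "id": i} for i in range(8, 10)]

balanced = oversample(records, "group")
count_a = sum(r["group"] == "A" for r in balanced)
count_b = sum(r["group"] == "B" for r in balanced)
print(f"Group A: {count_a}, Group B: {count_b}")
```

Duplicated records add no new information, so oversampling is a stopgap rather than a substitute for collecting more data. It is normally applied to the training set only, so that test results still reflect the real-world mix.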

Even with diverse data, the way an AI algorithm processes that data can introduce bias. To mitigate this, developers can incorporate fairness constraints into the algorithm, which are rules designed to ensure the AI treats all groups fairly. For instance, in a lending AI system, these constraints might ensure that loan approval rates remain equitable across different demographic groups.

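Developers can also apply debiasing techniques, such as reweighting the data (giving more importance to underrepresented groups) or adversarial debiasing, in which the model is trained alongside a second model that tries to infer a protected attribute, such as gender, from its predictions. The main model is rewarded for performing its primary function while giving that adversary as little to work with as possible.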
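To make reweighting concrete, here is a minimal sketch of one common formulation, often called reweighing. Note that it weights each combination of group and outcome rather than the group alone: each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent in the weighted data. The groups and labels below are invented for illustration.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical training labels: group B rarely has the favorable label (1).
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g, y, w in sorted(set(zip(groups, labels, weights))):
    print(f"group={g} label={y} weight={w:.2f}")
```

In this sample, favorable examples from group B receive the largest weight because they are the rarest combination. Many training libraries accept per-example weights like these, though how they are passed in depends on the library.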

Continuous testing and refinement of the algorithm are necessary to address any emerging biases, ensuring that the AI consistently achieves fair outcomes. Moreover, regular audits of AI systems are essential to prevent bias from creeping in over time.

As the AI processes new data, these audits should assess the system’s performance to ensure it continues to operate fairly. Including external reviewers in these audits can provide an unbiased perspective, offering insights that internal teams might miss. Additionally, keeping detailed records of audit findings helps track the AI’s performance over time and provides a clear picture of how bias is being managed.

Potential Consequences of Ignoring Bias in AI

Ignoring bias in AI can reinforce stereotypes, deepen inequalities, and erode public trust. Discriminatory outcomes fall hardest on marginalized groups, leading to systemic disadvantages in healthcare, finance, and employment. Thus, organizations must prioritize ethical AI development by implementing comprehensive bias mitigation strategies and fostering a culture of fairness and accountability.

By understanding and addressing bias proactively, the AI community can create more equitable solutions that benefit society.

Sources

- Zendata. (2024, June 3). AI Bias 101: Understanding and Mitigating Bias in AI Systems. Retrieved from https://www.zendata.dev/post/ai-bias-101-understanding-and-mitigating-bias-in-ai-systems

- Leena AI. (n.d.). Mitigating Bias in AI Algorithms: Identifying, and Ensuring Fairness in AI Systems. Retrieved from https://leena.ai/blog/mitigating-bias-in-ai/

- NIST. (2021, August 12). Comments Received on A Proposal for Identifying and Managing Bias in Artificial Intelligence. Retrieved from https://www.nist.gov/artificial-intelligence/comments-received-proposal-identifying-and-managing-bias-artificial

- Turner Lee, N. (2019, May 22). Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. Brookings Institution. Retrieved from https://www.brookings.edu/articles/algorithmic-bias-detection-and-mitigation-best-practices-and-policies-to-reduce-consumer-harms/

- NIST. (2022, March 15). Towards a Standard for Identifying and Managing Bias in Artificial Intelligence. NIST Special Publication 1270. Retrieved from https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.1270.pdf